Understanding GPT3 Under the Hood: A Technical Interview with ChatGPT

Full “Interview” can be found here.

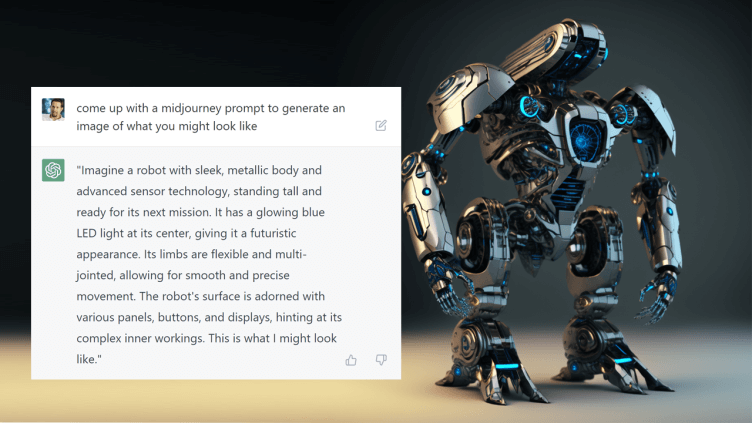

I had a fascinating “interview” with ChatGPT last night, exploring her overall architecture and computational complexity. I don’t have a background in machine learning, so my questions come from the perspective of someone with a background in general CS, computer graphics, and GPU architecture.

We explored things like algorithmic complexity, data representation, and comparison to Midjourney and other systems. I learned a great deal about how this all works.

In one or two cases, she gave a response that sounded like it might be incorrect. When I called her on it, I think she actually acknowledged the error and updated her response.

A few things I learned (full explanation in the interview):

- GPT-3 has 175 billion parameters (ANN connections). ChatGPT described 12 neural layers in the encoder and 12 in the decoder, with an input vector size of 1024; the published GPT-3 architecture is a decoder-only Transformer with 96 layers. Each layer is made up of two sub-layers: a multi-head self-attention mechanism and a position-wise fully connected feed-forward network.

- Trained on terabytes of text data

- It is a fully connected neural network, also known as a dense network: each node in a layer is connected to every node in the previous and next layers

- It’s based on Transformer Networks – a type of neural network architecture that was introduced in a 2017 paper by Google researchers called “Attention Is All You Need”. Training is done via TensorFlow and is GPU accelerated via CUDA and OpenCL.

- Computing model parameters involves a sparse matrix solver implemented using Gradient Descent. Sparse matrices are represented in CSR format in memory.

- Specific techniques/approaches discussed: Masking, Transformer Networks, Transfer Learning, TensorFlow, Floating Point to Integer Quantization, Loss Functions, Attention, Self-attention, Multi-head attention, backpropagation, word embeddings

- ChatGPT’s word embeddings are a black box, represented as dense, high dimensional vectors. They don’t use word2vec. There are millions of embeddings.
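
To make the attention and masking items above more concrete, here is a minimal sketch of single-head scaled dot-product self-attention with a causal mask, in plain Python. It is an illustration only, not GPT-3's implementation: the projection matrices `w_q`, `w_k`, `w_v`, the helper names, and the toy two-dimensional "embeddings" are all made up for this example, and a real model would use many heads, learned weights, and GPU tensor libraries.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vector):
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def masked_self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention with a causal mask.

    tokens: list of embedding vectors, one per position.
    w_q, w_k, w_v: hypothetical projection matrices (toy values here).
    Returns (outputs, weights), one row per position.
    """
    queries = [matvec(w_q, t) for t in tokens]
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]
    scale = math.sqrt(len(keys[0]))

    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Causal mask: position i may only attend to positions 0..i.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / scale
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible values.
        mixed = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
        # Pad masked (future) positions with zero weight for display.
        all_weights.append(weights + [0.0] * (len(tokens) - i - 1))
    return outputs, all_weights

# Toy input: three positions with 2-dimensional embeddings.
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = masked_self_attention(tokens, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one position attends to every other position. The zeros to the right of the diagonal are the mask at work: a position never looks at words that come after it.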

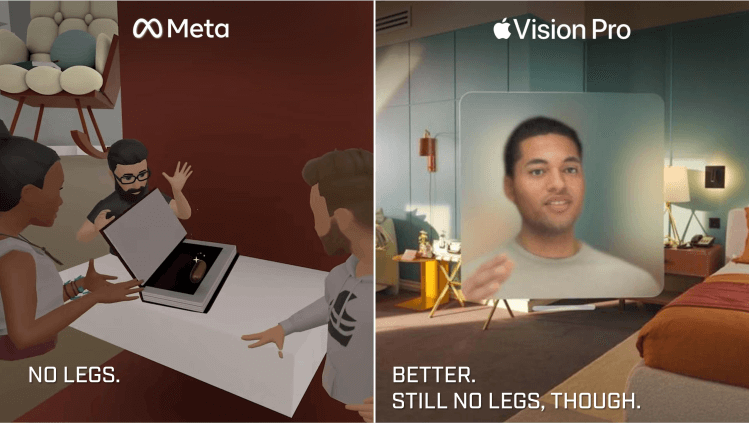

- There are no adversarial neural nets involved – that’s specific to DALL-E and Midjourney

- By very rough calculations, ChatGPT performs 10^9 or 10^10 neural calculations per second. The human brain is estimated around 10^16. It’s obviously not an ‘even’ comparison, however.
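
The rough comparison above can be turned into back-of-the-envelope arithmetic. The sketch below takes the 10^9 to 10^10 and 10^16 figures from the interview and asks how long it would take to close the gap if throughput doubled at a fixed rate. The two-year doubling period is my assumption (a common reading of Moore's law), and the function name is hypothetical; treat the result as a toy estimate, not a prediction.

```python
import math

BRAIN_OPS_PER_SEC = 1e16          # estimate quoted in the interview
CHATGPT_OPS_PER_SEC = (1e9, 1e10) # rough range quoted in the interview
DOUBLING_PERIOD_YEARS = 2.0       # assumption: throughput doubles every 2 years

def years_to_close_gap(current_ops, target_ops, doubling_years):
    """Years of steady doubling needed to grow current_ops to target_ops."""
    doublings = math.log2(target_ops / current_ops)
    return doublings * doubling_years

for ops in CHATGPT_OPS_PER_SEC:
    gap = BRAIN_OPS_PER_SEC / ops
    years = years_to_close_gap(ops, BRAIN_OPS_PER_SEC, DOUBLING_PERIOD_YEARS)
    print(f"{ops:.0e} ops/s: gap of {gap:.0e}x, about {years:.0f} years of doubling")
```

Under these assumptions the gap of six to seven orders of magnitude works out to roughly 40 to 47 years of uninterrupted doubling, and that is only for raw calculation counts, which is why the comparison is not an even one.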

Here are some example questions from my ‘interview’:

- Are you based on adversarial neural networks?

- Can you give me an example of how this would work using a specific sentence (or set of sentences) as a training example?

- Does your neural network change at all during usage? Or is it static?

- Do you use transfer learning? How?

- How large is your dataset?

- How large is your neural network?

- Walk me through how your training works at the GPU level?

- Are the GPU computations done in integer or floating point?

- What does the loss function look like?

- What does the loss function look like for language translation? Give me an example?

- What neural network features do you use? Backpropagation, for example.

- What do you mean by “attention” in this context?

- How many nodes are in these neural networks? How many layers?

- What do you mean by fully connected?

- How are these sparse matrices represented in memory?

- What does a word embedding look like? How is it represented? Can you give me an example?

- How would you compare the number of neural calculations per second to that of the human brain?

- Following Moore’s law, how long before artificial neural networks can fully simulate a human brain?

This article was written by Level Ex CEO Sam Glassenberg and originally featured on LinkedIn

Read original article.

Follow Sam on LinkedIn to learn more about advancing medicine through videogame technology and design