By Eric Gantwerker, MD, MMSc(MedEd), FACS (Level Ex VP, Medical Director)

We have witnessed a huge shift in access to knowledge over the past several decades. What was once available only in libraries and professors’ heads is now on the devices nearly everyone carries in their pockets. In the digital age, knowledge gaps can be closed quickly through Just-in-Time access to content and facts. However, learners still need foundational knowledge and conceptual understanding to integrate new information into what they already know.

A Post-Pandemic Education Landscape

COVID-19 undoubtedly changed the landscape of education at every level, as schools scrambled to build online learning platforms to make up for the massive loss of in-person learning. Healthcare education was no different, since its training relies heavily on in-person experiential learning. Even simulation suffered during the pandemic, as centers closed and access to hardware-based learning resources ground to a halt.

Through the pandemic’s forced closures and cancellations, we recognized three drawbacks of in-person experiential learning: higher cost, lower access, and dependence on chance. Clinicians must invest money and time to travel to and participate in in-person opportunities, those with financial or geographic limitations have less access, and in patient-based training, chance dictates which learning opportunities arise.

As the new normal sets in post-pandemic, educational leaders are examining how asynchronous and synchronous remote learning can transform education by replacing or augmenting in-person, synchronous learning experiences. That means taking advantage of the Just-in-Time learning, lower cost, and more frequent touchpoints that mobile and software-based solutions offer for imparting knowledge and sharpening skills.

The future is hybrid learning for both medical and surgical education. We have found that any cognitive or psychomotor skill is learned most efficiently through a combination of asynchronous components, synchronous remote components, and synchronous in-person elements. If we can move learners further up the learning curve before the high-cost, low-access touchpoints, we maximize learning efficiency. This asynchronous learning should be available when clinicians need it, on the device they already have.

The Value of Self-Regulated Learning

In education, we discuss the concept of self-regulated learning (SRL), which describes how highly motivated learners take command of their own learning: they identify their own knowledge and skill gaps, actively address them, receive feedback, and keep iterating as new deficits surface.

In general, highly motivated and astute learners, such as astronauts, need only access to materials, without faculty oversight, to address those deficits. As astronauts train for missions, their schedules are packed, and only a small fraction of that time is devoted to learning about medical emergencies and procedures.

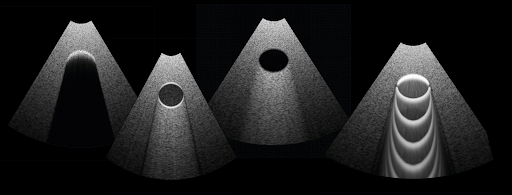

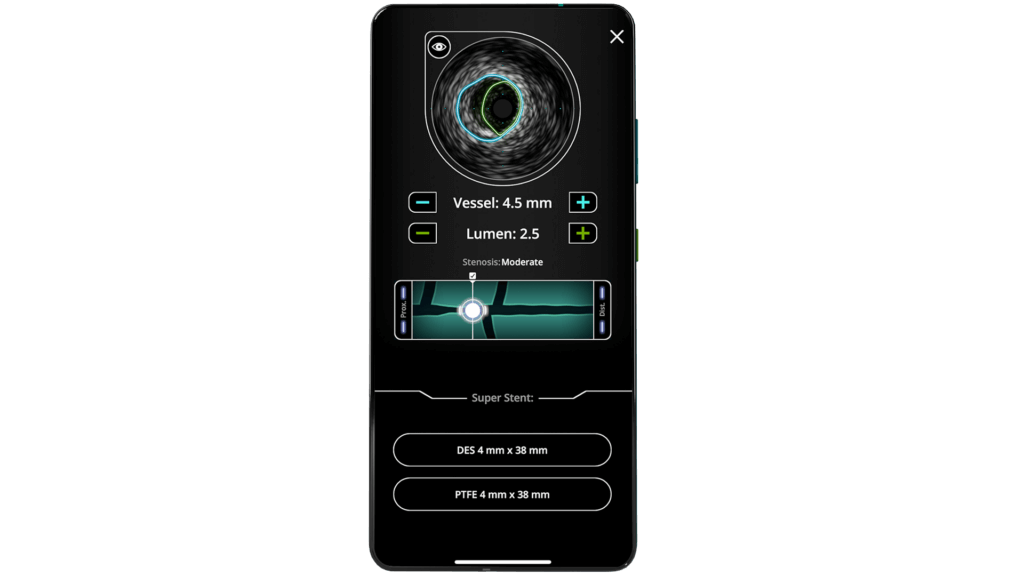

On near-Earth missions, crews typically have access to flight surgeons on the ground who can guide them through any medical scenario. But what happens on a deep space mission to Mars, where the communication delay can be 20 minutes or more each way? An emergency may have already played out by the time a message reaches the ground and a reply comes back. Astronauts therefore need efficient, Just-in-Time ways to train quickly on evaluating and treating these emergencies, even if only as a refresher. This covers both cognitive tasks (what is the diagnosis?) and psychomotor tasks (how do I perform an ultrasound, and what am I looking at?).

To meet this need, Level Ex developed a virtual training platform for space crews centered on this Just-in-Time training approach. Building on our prior work and in collaboration with the Translational Research Institute for Space Health (TRISH) and KBR, we built a two-part solution for the upcoming Polaris Dawn mission, with both parts aimed at helping astronauts better monitor their health and maximize their safety in space.

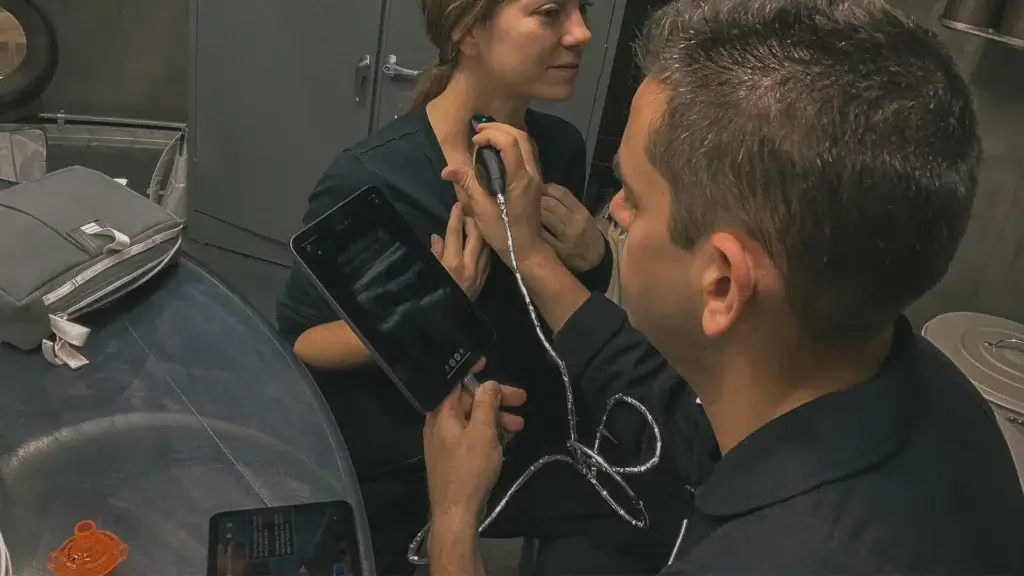

Our pre-flight orientation and training guide teaches the crew how to use the handheld Butterfly iQ+ ultrasound device that will be onboard the spacecraft. During the five-day orbital mission, the crew will use the Just-in-Time training and procedural guidance that Level Ex created to perform ultrasound procedures on themselves and collect data. The crew will track their blood flow patterns daily to learn more about how microgravity affects the human body. The experiment will also test how effective virtual training solutions, such as video games, are for Just-in-Time training on medical technology and procedures.

Polaris Dawn crew members practice using a handheld Butterfly iQ+ device for ultrasound imaging. The Polaris Dawn mission is slated to launch in 2023.

Training the Mind, Without the Medical Device

Many may wonder how learners can master a psychomotor or technical task without the specific medical device in hand. The answer depends on whether the learner is a novice or an expert in that task. For a novice, the first parts of any procedure are knowing:

- The context (where am I, and what am I trying to do?)

- The specific parameters of the equipment they are using (what does this button do?)

- The steps of the procedure

- How to analyze what they see

- How to physically perform the task

Many of these are cognitive in nature, so the learner doesn’t need the actual medical device in hand. In fact, leaving the device out often reduces cognitive load, letting learners focus on these elements without fidgeting with the device itself.

Too often, however, this part is taught through passive didactics and endless reading of manuals and documents. There is a better way: a meaningful, interactive experience on the learner’s own device. This is not to say learners never need to train with the specific medical device, but if they already have a strong understanding when they do get it in hand, they can focus on enacting the strategy they have already built through countless cycles of trial and error.

Even force feedback, the simulation of real-world physical touch, has a significant visual component, which the brain often relies on more heavily than tactile input. Think of a video of a rubber band stretched around a watermelon that is about to burst: viewers perceive the force without ever touching the watermelon.

Expert learners can also do a fair amount of training on a medical device before having it in hand. Again, they need to orient to what differs from their prior experience and understand the strategy and approach. This is why “how I do it” videos are so popular among surgeons: they can watch the video, then go to the bedside and enact that strategy. Their expert eyes readily spot changes in familiar patterns and integrate them into their knowledge base. They will need the physical device at some point, but by then they will have run through the procedure in their heads hundreds of times.

Regardless of experience level, training beforehand on one’s own time and device is a much lower-cost, higher-frequency touchpoint than any in-person lab or cadaver workshop. If learners are well trained on the cognitive components of the procedure, they can devote their full attention to handling the device and to the mechanical and technical aspects of the procedure. This limits the in-person time needed, cuts down on boring lectures and technical guides, and drills in what learners need to know, when they need it.

Such self-directed, Just-in-Time training maximizes the efficiency of learning any new device or technique, delivering better learning at lower cost. It’s a win-win.

Interested in learning more about Level Ex’s technology and how it’s accelerating medical device training and adoption? Contact us.